Creation

The Start of the ALGOL Effort

Huub de Beer

August 2006

1 The start of the ALGOL effort

In October 1955, an international symposium on automatic computing was held in Darmstadt, Germany. In the 1950s, the term “automatic computing” referred to almost anything related to computing with a computer. During this symposium ‘several speakers stressed the need for focusing attention on unification, that is, on one universal, machine-independent algorithmic language to be used by all, rather than to devise several such languages in competition.’1

To meet this need the Gesellschaft für Angewandte Mathematik und Mechanik (GAMM; association for applied mathematics and mechanics) set up a subcommittee for programming languages. This committee consisted of eight members: Bauer, Bottenbruch, Graeff, Läuchli, Paul, Penzlin, Rutishauser, and Samelson.2 In 1957, it had almost completed its task; instead of creating yet another algorithmic language, however, it was decided to ‘make an effort towards worldwide unification.’3

Indeed, the need for a universal algorithmic language was not felt only in Europe. Some computer user organisations in the USA, like SHARE, USE, and DUO, also wanted one standard programming language for describing algorithms. In 1957, they asked the Association for Computing Machinery (ACM) to form a subcommittee to study such a language.4 In June 1957, the committee was formed, consisting of fifteen members from industry, the universities, the users, and the federal government: Arden, Backus, Desilets, Evans, Goodman, Gorn, Huskey, Katz, McCarthy, Orden, Perlis, Rich, Rosen, Turanski, and Wegstein.5

Before this committee had held any meeting at all, a letter from the GAMM subcommittee for programming languages was sent to the president of the ACM, proposing a joint meeting to create one international algebraic language6 instead of two different but similar languages. The ACM agreed and three preparatory meetings were held to create a proposal for the language. At the third meeting, held in Philadelphia on 18 April 1958, F.L. Bauer presented the GAMM proposal to the ACM subcommittee.7 Both proposals shared many features, but the American proposal was more practical, whereas its European counterpart was more universal.8

The joint meeting was held at Zürich from May 27 to June 1, 1958, and was attended by F.L. Bauer, H. Bottenbruch, H. Rutishauser and K. Samelson from the GAMM subcommittee and by J. Backus, C. Katz, A.J. Perlis and J.H. Wegstein from the ACM counterpart. In the letter from the GAMM to the ACM in which this meeting was proposed, it was stated that ‘we hope to expand the circle through representatives from England, Holland and Sweden’.9 In the end, however, the meeting was attended by Americans, Germans and Swiss only. Nonetheless, international interest was growing, especially after the publication of the preliminary report on the new language.10

The discussions at the Zürich meeting were based on the two proposals for the new language and it was decided that:

‘The new language should be as close as possible to standard mathematical notation and be readable with little further explanation.

It should be possible to use it for the description of computing processes in publications.

The new language should be mechanically translatable into machine programs.’11

In addition, the language was to be machine independent. It was designed with no particular machine in mind.

With these decisions made, some problems arose because of the difference between a publication language and a programming language to instruct computers. In addition, there was disagreement on the symbols to use. For example, the decimal point was problematic: the Americans were used to a period and the Europeans to a comma.12 To solve all these representational issues, Wegstein proposed to define the language on three different levels of representation: reference, hardware and publication. With this solution adopted, the joint meeting ended successfully with the publication of the Preliminary Report: International Algebraic Language.13

The International Algebraic Language (IAL), or ALGOL 58, as it was called later, was the result of an international effort to create a universal algorithmic language. This short history, however, does not explain why there was a need for a language like IAL. Or, for that matter, why creating a new language was preferred over using one of the other already existing algorithmic languages of that time. To answer these questions, the state of automatic computing in the 1950s is discussed in the next section. After that, the features of IAL are discussed and a comparison between IAL and some of the other algorithmic languages is made. Along the way, the differences between the American and European approaches towards automatic programming and programming languages in general are treated as well.

2 The need for a universal algorithmic language

2.1 The American field of computing

The need for a universal algorithmic language was stressed at the Darmstadt symposium in 1955. Almost two years earlier, in December 1953, John Backus proposed the FORTRAN project to his chief at IBM.14 The goal of this project was to create a language to formulate ‘a problem in terms of a mathematical notation and to produce automatically a high speed IBM 704 program for the solution of the problem.’15 In other words, FORTRAN was to be an algorithmic language with a practically usable translator: the programs generated by this system should be as efficient as hand-coded programs written in machine code or in an assembly language.

The computers of the early 1950s were instructed by using the machine’s own code, the machine code. To aid the programmer these binary machine codes were improved: the binary representation of the operations of the computing machine was replaced with meaningful symbols. Later, the operands, or addresses, of these operations were also transformed. First, the binary representation of addresses became a decimal representation. The addresses, however, still had to be specified in an absolute manner, and the next improvement was the introduction of relative addressing. Finally, the addressing itself also became symbolic: many frequently used memory locations, like registers, were represented by a symbol. Bit by bit, machine codes evolved into assembly languages.

Although these assembly languages were an improvement over machine codes, it was not enough to prevent the programmer from making errors. To decrease the number of errors, subroutine libraries consisting of useful operations and program parts were developed.16 New programs could be written by including these subroutines in the code. Creating complex programs had now become a much easier task than writing all the code by hand.17

Another way to improve programming was using an automatic coding system. These systems extended a machine with useful features, like floating point operations and input and output operations, by providing a virtual machine that was easier to program than the real computing machine.18 The ultimate goal of the research groups creating these automatic coding systems was to create a language looking ‘like English or algebra, but which the computer could convert into binary machine instructions.’19

The biggest problem of automatic coding systems was efficiency, or the lack thereof. Programs generated with these systems were up to five times less efficient than hand-coded assembly programs.20 This resulted in an atmosphere in which the idea of automatic coding was conceived as fundamentally wrong: ‘efficient programming was something that could not be automated’21 was an often heard statement. This attitude towards automatic programming was put aptly by Backus in 1980:

‘Just as freewheeling weste[r]ners developed a chauvinistic pride in their frontiership and a corresponding conservatism, so many programmers of the freewheeling 1950s began to regard themselves as members of a priesthood guarding skills and mysteries far too complex for ordinary mortals. (…) The priesthood wanted and got simple mechanical aids for the clerical drudgery which burdened them, but they regarded with hostility and derision [to] more ambitious plans to make programming accessible to a larger population. To them, it was obviously a foolish and arrogant dream to imagine that any mechanical process could possibly perform the mysterious feats of invention required to write an efficient program.’22

Unfortunately for this priesthood, programming did become accessible to a larger population during the 1950s; hundreds of computers were being built and used on a commercial basis.23 Programming in assembly language, including the inevitable debugging, accounted for almost three quarters of the total cost of running a computing machine.24 This observation was the reason why Cuthbert Hurd, Backus’s chief at IBM, accepted Backus’s proposal for the IBM Mathematical FORmula TRANslating system FORTRAN.25

Because automatic coding systems were, in the end, economically more profitable to use, automatic coding became accepted. Using automatic coding systems would reduce the time spent on programming and debugging; programmers could spend more time on solving new problems and the computer could spend more time on running these programs rather than on debugging them.

Although many computers were being used for data processing and other business applications during the late 1950s, scientific computing was and would be one of the main application areas. As a result, most automatic coding systems of that time were algorithmic systems. When the attitude towards automatic coding changed in favour of higher level programming languages, ‘it almost seemed that each new computer, and even each new programming group, was spawning its own algebraic language or cherished dialect of an existing one.’26 This diversity of algebraic languages caused the need for a universal algorithmic language. A universal language would enable users of different machines to communicate about programming with each other and to share their programs.

2.2 The European field of computing

The first contribution to programming languages in Europe was made by Konrad Zuse. In the years 1945–1946, he developed a programming language called Plankalkül. This language was far more sophisticated than most of the languages developed in the 1950s and even 1960s. Among the features were procedures with input and output parameters, compound statements, records, control statements, variables, subscripts, and an assignment statement.27 His work on programming languages, however, was not recognised outside the German-speaking area until 1972.28

In 1934, Zuse started working on computing machines, and he produced three successive machines before he started with the Z4 machine.29 At the end of the Second World War, Zuse fled from Berlin to Bavaria with his Z4 computing machine. Because of the war, Zuse was unable to go on with his work on the Z4 and he started working on several theoretical ideas about computing and programming; one of these ideas was his Plankalkül.30 After the war, the Z4 was rented by the Eidgenössische Technische Hochschule (ETH) in Zürich and in 1950 it was put into operation.31 Most of the computations performed on the Z4 were numerical calculations for all kinds of complex technical problems, from matrix calculations to computations on a dam.32

At that time, Heinz Rutishauser was working at the ETH. To make programming of the Z4 easier he invented a method to let the computer produce its own programs. This method was based on the fact that many programs consisted of repeating sequences of instructions with addresses changing by an underlying pattern.33 Later, Rutishauser (1952) observed that ‘den Rechenplan für eine bestimmte Formel oder Formelgruppe durch die Rechenmaschine selbst “ausrechnen” zu lassen ist, das heisst die Rechenmaschine ist als ihr eigenes Planfertigungsgerät zu benützen.’34 In English, roughly: the computation plan for a given formula or group of formulae is to be “computed” by the computing machine itself; that is, the computing machine is to be used as its own plan-preparation device. With “Planfertigungsgerät” Rutishauser referred to Konrad Zuse’s idea of a code-generating machine for his Plankalkül.35

From 1949 till 1951, Rutishauser developed an algebraic language for a hypothetical computer and two compilers for that language.36 In this language there was only one control structure: the Für statement. An example makes its meaning and use clear: Für \(k = 2 (1) n\): \(a_{nn}^{j} \times a_{1k}^{j-1} = a_{n,k-1}^{j}\). means that for all \(k\) from \(2\) to \(n\) (with step \(1\)), the value of \(a^{j}_{n,k-1}\) becomes the value of \(a^{j}_{nn}\) multiplied by the value of \(a^{j-1}_{1k}\). Note that the variable receiving the result is written on the right-hand side of the equals sign.

A program to compute the inversion of matrix \(a^{0}_{ik}\) in Rutishauser’s language37:

Für \(i = 1\):

Für \(k = 1\): \(\frac{1}{a_{ik}^{j-1}} = a_{nn}^{j}\).

Für \(k = 2 (1) n\): \(a_{nn}^{j} \times a_{1k}^{j-1} = a_{n,k-1}^{j}\).

Für \(i = 2 (1) n\):

Für \(k = 1\): \(-a_{ik}^{j-1} \times a_{nn}^{j} = a_{i-1,n}^{j}\).

Für \(k = 2 (1) n\): \(a_{ik}^{j-1} - (a_{i1}^{j-1} \times a_{n,k-1}^{j}) = a_{i-1,k-1}^{j}\).
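To make the index juggling in this program easier to follow, here is a minimal Python sketch of it. It assumes that the six lines describe one pass that builds the matrix \(a^{j}\) from \(a^{j-1}\), and that this pass is repeated for \(j = 1, \ldots, n\); that outer loop is not shown in the program above and is an assumption here, as are the function names `rutishauser_pass` and `invert`. The sketch does no pivoting, so it breaks down when a leading element becomes zero.

```python
def rutishauser_pass(a):
    """One pass of the program above: build a^j from a^(j-1).

    The 1-based indices of the original are mapped to 0-based Python lists.
    """
    n = len(a)
    new = [[0.0] * n for _ in range(n)]

    # Für i = 1, Für k = 1: 1 / a[1][1] => new[n][n]
    new[n - 1][n - 1] = 1.0 / a[0][0]
    # Für i = 1, Für k = 2 (1) n: new[n][n] * a[1][k] => new[n][k-1]
    for k in range(2, n + 1):
        new[n - 1][k - 2] = new[n - 1][n - 1] * a[0][k - 1]

    for i in range(2, n + 1):
        # Für k = 1: -a[i][1] * new[n][n] => new[i-1][n]
        new[i - 2][n - 1] = -a[i - 1][0] * new[n - 1][n - 1]
        # Für k = 2 (1) n: a[i][k] - a[i][1] * new[n][k-1] => new[i-1][k-1]
        for k in range(2, n + 1):
            new[i - 2][k - 2] = a[i - 1][k - 1] - a[i - 1][0] * new[n - 1][k - 2]
    return new


def invert(a):
    """Assumed outer loop: apply the pass n times (j = 1, ..., n)."""
    for _ in range(len(a)):
        a = rutishauser_pass(a)
    return a
```

Each pass eliminates on the leading element and shifts rows and columns up by one position, so after \(n\) passes every diagonal element has served once and the rows and columns are back in their original order.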

At the same time and in the same place, Corrado Böhm, although aware of Rutishauser’s work (though not vice versa), was doing similar research. Böhm also developed a hypothetical machine and a language to instruct that machine. For the first time, however, the translator itself was written in its own language.38 In this language everything was a kind of assignment statement. For example, go to \(B\) was encoded as \(B \rightarrow \pi\): the value of \(B\) is assigned to the program counter.39
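The idea that a jump is just an assignment to the program counter can be illustrated with a toy interpreter in Python. This is only a sketch of the principle, not a reconstruction of Böhm’s machine or language: the instruction format (a source paired with a destination), the name `pi` for the program counter, and the function `run` are all assumptions made for this illustration.

```python
def run(program, memory, max_steps=1000):
    """Run a list of (source, destination) assignments.

    'pi' is the program counter. A source is either a constant or a
    function of the memory. Hypothetical toy machine, for illustration only.
    """
    memory = dict(memory)
    memory["pi"] = 0
    steps = 0
    while 0 <= memory["pi"] < len(program) and steps < max_steps:
        source, destination = program[memory["pi"]]
        memory["pi"] += 1  # default: continue with the next instruction
        value = source(memory) if callable(source) else source
        memory[destination] = value  # a jump is an assignment to 'pi'
        steps += 1
    return memory


# "go to 2" written as the assignment 2 -> pi; instruction 1 is skipped.
skip = run([(2, "pi"), (99, "y"), (1, "z")], {})

# A countdown loop: x - 1 -> x, then a conditional assignment to pi.
countdown = run(
    [
        (lambda m: m["x"] - 1, "x"),
        (lambda m: 0 if m["x"] > 0 else 2, "pi"),
    ],
    {"x": 3},
)
```

With this encoding no separate jump instruction is needed: conditional and unconditional transfers of control differ only in how the value assigned to `pi` is computed.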

In 1955, Bauer and Samelson decided to start working on formula translation based on Rutishauser’s work.40 They invented a method to translate formulae based on the stack principle.41 At the end of 1955, after the Darmstadt symposium, the GAMM subcommittee on programming languages was set up. The members of this subcommittee, Bauer and Samelson from Munich, Bottenbruch from Darmstadt, and Rutishauser from Zürich, formed the ZMD group.42 The proposal for the new algorithmic language created by this group was influenced by their previous work on programming languages and formula translation. Actually, they developed both language and translation technique side by side, as will be explained in Section Sequential Formula Translation.

2.3 The difference between Europe and the USA and the need for universalism

The situation in Europe was totally different from the situation in the USA. In Europe the field of computing was just emerging in the late 1950s. In almost every country of Europe, research centres started with building and using their own computing machines. In the USA, on the other hand, a commercial computer industry was already selling computers to research centres, government, and industry.

The computing machines being built in Europe were intended for scientific computing and, eventually, an algorithmic language was needed to make these machines productive. In the USA, several algorithmic languages were already developed and used as a result of this need. Many research groups had created their own algorithmic language. For almost every computing machine an algorithmic language, or variant of another language, existed. In this atmosphere the need for universalism was felt.

When, in late 1958, the report on IAL was published it was received with great interest from the computing community. During the development of ALGOL 60 this enthusiasm would only grow: ALGOL was to be the language of choice for many of the computing machines being built in Europe. In America, however, the market demanded a working implementation of a good language, not an academic promise for a better language. During the development of ALGOL 60 IBM became the dominant player in the computer industry.43 FORTRAN matured into a usable and efficient language and was ported to many machines. As a result, FORTRAN became the de facto standard programming language for scientific computing.

3 Why was IAL chosen over other algorithmic languages?

In 1958, at the Zürich meeting, FORTRAN was not considered to be the ideal universal algorithmic language; IAL was developed instead. The question is: Why did it have to be IAL? Why did FORTRAN, or one of the other languages, not satisfy those present at the Zürich meeting? Why create a new algorithmic programming language if there were already other algorithmic languages? To answer these questions four algorithmic languages of the late 1950s are discussed and compared: IT, MATH-MATIC, FORTRAN, and IAL.

IT and MATH-MATIC are discussed because they belonged, according to Jean Sammet, to the group of automatic coding systems which were used more widely.44 Besides, these languages were created, among others, by respectively A.J. Perlis and C. Katz, both participating in the ALGOL effort. FORTRAN is chosen because it would become the de facto standard and J.W. Backus, the leader of the group developing FORTRAN, also participated in the creation of IAL at the Zürich meeting.

3.1 IT

During 1955 and 1956, ideas about an algebraic language and its compiler for the Datatron computer were developed at Purdue University Computing Laboratory. Two members of the group, A. Perlis and J.W. Smith, moved to Carnegie Institute of Technology in the summer of 1956. They adapted the ideas about the language to a new machine, the IBM 650. In October 1956, the compiler was ready and named Internal Translator (IT).45 According to Knuth and Pardo (1975) this was ‘the first really useful compiler’46. It was used on many installations of the IBM 650, and it was even ported and used on some other machines too.47

| Symbol | Name | Representation |
|---|---|---|
| \((\) | Left parenthesis | L |
| \()\) | Right parenthesis | R |
| \(.\) | Decimal point | J |
| \(\leftarrow\) | Substitution | Z |
| \(=\) | Relational equality | U |
| \(>\) | Greater than | V |
| \(\geq\) | Greater than or equal | W |
| \(+\) | Addition | S |
| \(-\) | Subtraction | M |
| \(\times\) | Multiplication | X |
| \(/\) | Division | D |
| \(^{\textrm{exp}}\) | General exponentiation | P |
| \(,\) | Comma | K |
| \("\) | Quotes | Q |
| | Type | T |
| | Finish | F |

Although IT48 was popular, it had some disadvantages. The main problem was the primitive hardware representation of the language; only alphanumeric characters were allowed and many symbols were translated into one single character. In the table above49, some of the symbols used in IT are paired with their translation into the hardware representation. Variables were written as a number of \(I\)’s followed by a number, and, in case of floating point variables, prefixed with a \(Y\) or a \(C\). A subroutine was called by its line number followed by a comma separated list of parameters, and all this together was put between quotes.

Besides this primitive hardware representation the scanning technique used was also problematic. This process resulted in incorrect and difficult evaluation of mathematical expressions50 and it was very time consuming too.51

IT itself was a simple arithmetic language; it consisted of simple mathematical operators and some basic control structures like selection and a form of looping. The IF statement was used as: \(k\): \(G\) \(I3\) IF \((Y1 + Y2) = 9\) meaning that if the value of \((Y1 + Y2)\) is equal to \(9\) then the statement with number equal to the value of variable \(I3\) is executed, otherwise the next statement is executed. Every statement was preceded with a number lower than 626 (here represented by \(k\)).

The looping construct was written like \(k: j, v1, v2, v3, v4\). Here \(j\) is the number of the last statement of the loop; the loop itself starts at statement \(k+1\). \(v1\) is the looping variable, with starting value \(v2\), step \(v3\) and end value \(v4\). For example, the following program denotes the computation of \(y = \sum\limits_{i=0}^{10} a_ix^i\)52 (this example probably contains errors, but it is copied as is):

| | reference | hardware | |
|---|---|---|---|
| \(1\) | READ | READ | \(F\) |
| \(2\) | \(Y2 \leftarrow 0\) | \(Y2 Z OJ\) | \(F\) |
| \(3\) | \(4, I1, 11, -1, 1\) | \(4K I1K 11K m1K 1K\) | \(F\) |
| \(4\) | \(Y2 \leftarrow CI1 + Y1 \times Y2\) | \(Y2 Z CI1 Y1 X Y2\) | \(F\) |
| \(5\) | \(H\) | \(H\) | \(FF\) |

The above example is a program written in the IT language. The reference version of the program is a little bit cryptic but it can be understood. Unfortunately, the hardware representation of the program is worse than cryptic. The programmer had to translate his programs by hand into the hardware representation and, as a result, using IT was difficult and error prone.
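For comparison, the computation the IT program appears to describe can be sketched in Python. The sketch rests on an interpretation that the source does not state outright: that \(C1\) to \(C11\) hold the coefficients \(a_0\) to \(a_{10}\), that \(Y1\) holds \(x\), and that the loop evaluates the polynomial by Horner’s scheme. Since the IT listing itself may contain errors, this is a reading of its intent rather than a faithful translation; the function name `it_polynomial` is made up for the illustration.

```python
def it_polynomial(c, x):
    """Evaluate c[0] + c[1]*x + ... + c[-1]*x**(len(c) - 1) by Horner's scheme.

    c plays the role of C1..C11 and x the role of Y1 (assumed interpretation).
    """
    y = 0.0                             # statement 2: Y2 <- 0
    for i in range(len(c), 0, -1):      # statement 3: I1 from 11 down to 1, step -1
        y = c[i - 1] + x * y            # statement 4: Y2 <- C(I1) + Y1 * Y2
    return y


# 1 + 2x + 3x^2 at x = 2
value = it_polynomial([1.0, 2.0, 3.0], 2.0)
```

Running the loop from the highest coefficient down to the lowest is what allows the single statement 4 to accumulate the whole sum without computing any power of \(x\) explicitly.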

3.2 MATH-MATIC

The language AT-3 was developed by a group headed by Charles Katz at UNIVAC, starting in 1955. In April 1957, when its preliminary report was published, the language was renamed MATH-MATIC.53 The language was intended for use on the UNIVAC I computer, a computing machine without floating point operations. For this reason, all floating point computations had to be done by subroutine calls. Another factor that reduced efficiency was the translation of MATH-MATIC programs into A-3 programs. A-3, itself an extension of A-2, was the third in a series of compilers for the UNIVAC I and was well known for its inefficiency. This series of compilers was created by the group headed by Grace Hopper.54

Below is the TPK algorithm in MATH-MATIC.55 The TPK algorithm is not a useful algorithm, but it is used in Knuth and Pardo’s article in which they compare and discuss different early programming languages.

| | |
|---|---|
| (1) | READ-ITEM A(11) . |
| (2) | VARY I 10 (-1) 0 SENTENCE 3 THRU 10 . |
| (3) | J = I \(+\) 1 . |
| (4) | Y = SQR \(\vert\) A(J) \(\vert\) \(+\) 5 \(*\) A(J)\(^3\) . |
| (5) | IF Y \(>\) 400, JUMP TO SENTENCE 8 . |
| (6) | PRINT-OUT I, Y . |
| (7) | JUMP TO SENTENCE 10 . |
| (8) | Z = 999 . |
| (9) | PRINT-OUT I, Z . |
| (10) | IGNORE . |
| (11) | STOP . |
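For readers unfamiliar with the notation, the MATH-MATIC program above can be paraphrased in Python as follows. This is a sketch for orientation only: the function name `tpk` is made up, the input is passed as a list instead of being read from a device, and the output is collected in a list instead of being printed.

```python
import math


def tpk(a):
    """Paraphrase of the MATH-MATIC program; a must hold eleven numbers."""
    results = []
    for i in range(10, -1, -1):                   # (2) VARY I 10 (-1) 0
        j = i + 1                                 # (3) J = I + 1 (1-based index)
        t = a[j - 1]
        y = math.sqrt(abs(t)) + 5 * t ** 3        # (4)
        if y > 400:                               # (5)
            results.append((i, 999))              # (8), (9)
        else:
            results.append((i, y))                # (6)
    return results


output = tpk([float(v) for v in range(11)])
```

The eleven values are processed in reverse order, and any result above 400 is replaced by the marker value 999, just as in sentences 8 and 9 of the original.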

Although the MATH-MATIC implementation was very inefficient, the language did have some interesting features. First and foremost, it was very readable: control statements were written as English words or phrases and expressions were written in the standard mathematical notation. Besides basic control statements the language also contained over twenty input and output statements.56

For each of the relational operators \(<\), \(>\), \(=\), and two combinations of these operators, there was a different IF statement. There were also five looping constructs, all variations of the start-step-end variant, and one variation with a list of values to iterate over.57

The biggest obstacle to the acceptance of MATH-MATIC was the small number of UNIVAC machines in use and, hence, the small number of users of the language. Sammet remarks rightly that this language could have become the standard for scientific computing if it had been implemented on a more popular and powerful machine.58

3.3 FORTRAN

As said earlier, in December 1953, J.W. Backus proposed FORTRAN to his chief at IBM.59 The idea behind the FORTRAN automatic coding system was that programs written in FORTRAN would run as efficiently as hand-coded programs in machine code or in an assembly language.60 Initially the language was designed for the IBM 704 and some of the statements in the language reflected this machine directly. An example is the statement IF (SENSE LIGHT \(i\)) \(n1\), \(n2\):61 if sense light \(i\) on the IBM 704 is on, the next statement executed is \(n1\); if the light is off, the next statement is \(n2\).

Besides these machine dependent statements (there were six of them), many input and output statements were included. Famous was the FORMAT statement. With this statement it was possible to define and use formatted input and output. Among the control statements were an IF statement and a DO statement. The statement IF \((a) n1, n2, n3\) denotes that the next statement executed is respectively \(n1\), \(n2\), or \(n3\) if the value of \(a\) is less than, equal to, or greater than \(0\). The use of these statements is clarified in the example program TPK written in FORTRAN62:

| | |
|---|---|
| C | THE TPK ALGORITHM, FORTRAN STYLE |
| | FUNF(\(T\)) = SQRTF(ABSF(\(T\)))\(+5.0*T**3\) |
| | DIMENSION A(\(11\)) |
| 1 | FORMAT (\(6F12.4\)) |
| | READ \(1, A\) |
| | DO \(10 J = 1,11\) |
| | \(I = 11 - J\) |
| | \(Y =\) FUNF\((A(I+1))\) |
| | IF \((400.0-Y) 4,8,8\) |
| 4 | PRINT \(5, I\) |
| 5 | FORMAT (\(I10, 10H\) TOO LARGE) |
| | GO TO 10 |
| 8 | PRINT \(9, I, Y\) |
| 9 | FORMAT \((I10, F12.7)\) |
| 10 | CONTINUE |
| | STOP \(52525\) |
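The three-way IF used in this program may look unfamiliar today. A minimal Python sketch of its branching rule, using a made-up helper name `arithmetic_if` that returns the label of the statement to continue with, could look like this:

```python
def arithmetic_if(value, n1, n2, n3):
    """Return the statement label selected by IF (value) n1, n2, n3."""
    if value < 0:
        return n1
    if value == 0:
        return n2
    return n3


# IF (400.0-Y) 4,8,8 from the program above, for two sample values of Y
label_when_too_large = arithmetic_if(400.0 - 500.0, 4, 8, 8)
label_when_in_range = arithmetic_if(400.0 - 322.0, 4, 8, 8)
```

In the TPK program the second and third labels are both 8, so the statement acts as a two-way test: a negative value of \(400.0 - Y\) leads to the TOO LARGE message at label 4, and anything else leads to the normal output at label 8.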

In June 1958, the second version of FORTRAN was released. The main improvement was the inclusion of subroutines and functions in the language itself. In addition, programs written in assembly language could be linked directly into FORTRAN programs. This new version was also made available on other IBM machines (the IBM 709 and IBM 650 in 1958; the IBM 1620 and IBM 7070 in 1960). In the 1960s, it was implemented on machines of other manufacturers as well. When the language evolved to FORTRAN IV it became quite commonplace. Unfortunately, the different FORTRAN implementations were not always compatible with each other.

3.4 IAL

The history of the development of IAL has already been told in Section Start of the ALGOL Effort. The development distinguished itself from the development of the other languages because it was created at a meeting by two different committees: the ACM subcommittee and the GAMM subcommittee. In addition, these two committees were from different countries, respectively the USA and the German-speaking countries in Europe. Finally, IAL was designed with no particular machine in mind; it was machine independent.

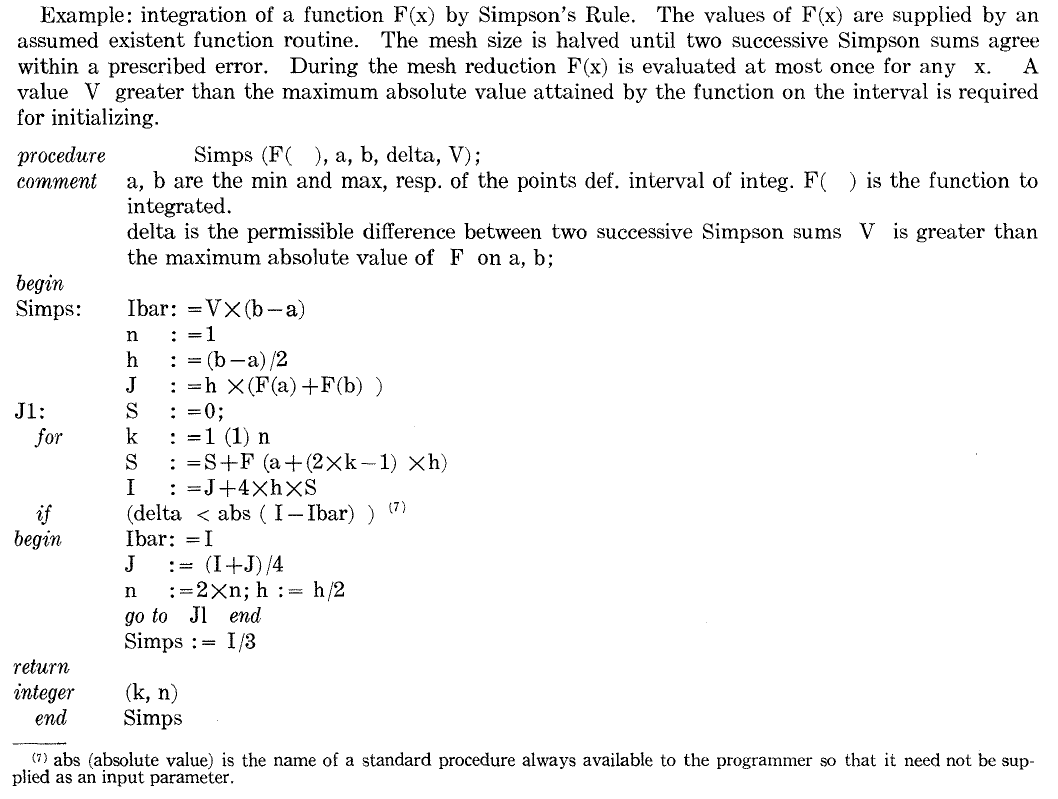

One of the most interesting features of IAL was the compound statement. A sequence of statements separated by semicolons was treated as one statement when it was enclosed by the keywords begin and end. It fitted very well in combination with control structures like the if statement and the for statement.

In the above Figure, the use of these control structures and the compound statement is exemplified. An if statement is formed as if B, with B a boolean expression. If B is true, the next statement is executed; otherwise the next statement is skipped. The for statement is formed accordingly. for i := 0 (1) N means that the values from 0 to N, with step 1, are assigned to the variable i in turn. That is, for every value, the statement following the for statement is executed once.

One of the problems of the language was the total lack of input and output statements. Because IAL was to be used to describe computational processes only, these statements were considered unnecessary.64 Another problematic aspect was the way procedures were defined. In Section ALGOL 60’s notation this problem is explained in more detail.

3.5 Why did IAL have to be the international algebraic language?

At the start of this section, the question was asked why IAL did have to be the universal algorithmic language. After comparing four algorithmic languages this question can be answered.

First of all, IT, MATH-MATIC, and FORTRAN were machine dependent. One of the goals of the ALGOL effort was to create a machine independent language. Of course, one of the already existing languages could have been used as a basis for a new machine independent language.

FORTRAN was, by far, the most used language due to the dominance of IBM in the computing industry. Exactly this dominance was the reason for the American subcommittee to ignore the existence of FORTRAN. Choosing FORTRAN as basis for their proposal would only increase the dominant position of IBM.65 Ironically, FORTRAN would become the de facto universal algorithmic language in the early 1960s.

Second, both IT and MATH-MATIC had some serious problems. IT did not satisfy because of its primitive hardware representation. The language was too cryptic to be usable as the universal algorithmic language; one of the initial goals of IAL was that it should resemble standard mathematical notation as much as possible. MATH-MATIC, on the other hand, satisfied this requirement very well; it was a neat language. Most of its statements were less machine dependent than the statements in FORTRAN and it had good input and output facilities. Unfortunately, MATH-MATIC’s implementation was very inefficient and the language was not very well known; the number of UNIVAC installations and, because of that, the number of MATH-MATIC systems and users was just too small.

Finally, and most importantly, the Europeans had no reason whatsoever to choose an American language as the basis for their proposal. Actually, the result of the Zürich meeting would be a combination of the American and European proposals. As both subcommittees did not choose an existing American programming language the universal international algorithmic language had to be a new language.

The new language, however, shared most of its features with the already existing languages. There was a widespread agreement on the typical elements that should be included in an algorithmic language: algebraic expressions and control structures like selection and a looping construct.

The reason why IAL had to be the universal algorithmic language was not that the other languages were bad; they just did not satisfy the requirements for an international and universal language.

4 Conclusion

This chapter started with a short history on the start of the ALGOL effort. In the middle of the 1950s, there was a need for a universal algorithmic language and in both the USA and the German-speaking countries of Europe a committee was set up to create a proposal for such a language. In 1958, at the Zürich meeting, these proposals were combined into a new language, called IAL. Although this history seemed to be complete, some questions can be asked: why was there a need for a universal algorithmic language and why did it have to be IAL?

The answer to the first question can only be given by describing the differences between USA and Europe. In the USA the field of computing became a professional field in the 1950s. There was a computer industry selling computers to companies, the government and some research centres. In this commercial atmosphere it became clear that problem-oriented programming languages were needed to make profitable use of computers.

Unfortunately, these programming languages were not accepted by many programmers because they were slow and generated inefficient programs. It was believed that creating efficient programs could not be automated. J.W. Backus, however, did believe that this was possible, and he proposed the FORTRAN system to his chief at IBM. Indeed, this system would eventually generate programs that ran almost as efficiently as hand-coded programs. Because problem-oriented languages were seen as a necessity, many research groups and computer companies started creating such languages. This development resulted in a huge diversity of similar languages and in this situation the cry for universalism arose.

In Europe, the situation was different. The field of computing was emerging, and in many countries the first computers were being built in the late 1950s. The main application for these machines was scientific computing. Instructing these computers was a difficult and error prone task. To solve this problem, work was started on formula translation and eventually on an algorithmic language. After the international Darmstadt symposium in 1955, Rutishauser, Bauer, Samelson, and Bottenbruch started working on an algorithmic language. Instead of creating yet another algorithmic language, they proposed to the ACM to jointly create an international algebraic language.

Neither the Americans nor the Europeans based their proposal on an already existing language. IT and MATH-MATIC were neither sufficient nor well known. FORTRAN, on the other hand, was well known, but the Americans wanted to undermine the dominance of IBM. Hence, the ACM subcommittee proposed a new language. The combination of the two proposals became IAL: a new algorithmic language, similar to the other algorithmic languages of its time.

The reason why IAL had to be the international algebraic language was not that it was better than the other programming languages, but that it was machine-independent and a compromise between two computing communities, with the potential to become part of a truly international effort.

References

Heinz Rutishauser, Description of ALGOL 60, ed. F. L. Bauer et al., vol. 1, Handbook for Automatic Computation (Berlin: Springer-Verlag, 1967), 5.

Peter Naur, “Transcripts of Presentations,” in HOPL-1: The First ACM SIGPLAN Conference on History of Programming Languages (New York, NY, USA: ACM Press, 1978), 148, Frame 3.

Rutishauser, Description of ALGOL 60, 1:5.

R. W. Bemer, “A Politico-Social History of Algol,” in Annual Review in Automatic Programming, ed. Mark I. Halpern and Christopher J. Shaw, vol. 5 (London: Pergamon, 1969), 160.

Alan J. Perlis, “The American Side of the Development of Algol,” in HOPL-1: The First ACM SIGPLAN Conference on History of Programming Languages (New York, NY, USA: ACM Press, 1978), 4–5, doi:10.1145/800025.808369.

Bemer, “A Politico-Social History of Algol,” 160.

Perlis, “The American Side of the Development of Algol,” 5.

Rutishauser, Description of ALGOL 60, 1:5.

Bemer, “A Politico-Social History of Algol,” 161.

Rutishauser, Description of ALGOL 60, 1:6.

A. J. Perlis and K. Samelson, “Preliminary Report: International Algebraic Language,” Commun. ACM 1, no. 12 (1958): 9, doi:10.1145/377924.594925.

Perlis, “The American Side of the Development of Algol,” 6.

Perlis and Samelson, “Preliminary Report: IAL.”

John Backus, “The History of FORTRAN I, II, and III,” in HOPL-1: The First ACM SIGPLAN Conference on History of Programming Languages (New York, NY, USA: ACM Press, 1978), 166, doi:10.1145/800025.808380.

Programming Research Group IBM, Preliminary Report – Specifications for the IBM Mathematical FORmula TRANslating System FORTRAN (New York: IBM, 1954), 1, http://community.computerhistory.org/scc/projects/FORTRAN/BackusEtAl-Preliminary Report-1954.pdf.

Martin Campbell-Kelly, Computer: A History of the Information Machine (BasicBooks, 1996), 186.

Jean E. Sammet, Programming Languages: History and Fundamentals, Series in Automatic Computation (Englewood Cliffs, N. J.: Prentice-Hall, 1969), 3–4.

Backus, “The History of FORTRAN I, II, and III,” 165.

Campbell-Kelly, Computer, 187.

Backus, “The History of FORTRAN I, II, and III,” 165.

Ibid.

John Backus, “Programming in America in the 1950s – Some Personal Impressions,” in A History of Computing in the Twentieth Century, ed. N. Metropolis, J. Howlett, and Gian-Carlo Rota (Academic Press, 1980), 127–28.

Saul Rosen, “Programming Systems and Languages. A Historical Survey,” in Programming Systems and Languages, ed. Saul Rosen (London: McGraw-Hill, 1967), 3.

Backus, “The History of FORTRAN I, II, and III,” 166.

Campbell-Kelly, Computer, 188.

Perlis, “The American Side of the Development of Algol,” 4.

Friedrich L. Bauer, “Between Zuse and Rutishauser – the Early Development of Digital Computing in Central Europe,” in A History of Computing in the Twentieth Century, ed. N. Metropolis, J. Howlett, and Gian-Carlo Rota (Academic Press, 1980), 514–15; Konrad Zuse, “Some Remarks on the History of Computing in Germany,” in A History of Computing in the Twentieth Century, ed. N. Metropolis, J. Howlett, and Gian-Carlo Rota (Academic Press, 1980), 620–27; F. L. Bauer and H. Wössner, “The ‘Plankalkül’ of Konrad Zuse: A Forerunner of Today’s Programming Languages,” Commun. ACM 15, no. 7 (1972): 678–85, doi:10.1145/361454.361515.

Donald E. Knuth and Luis Trabb Pardo, “Early Development of Programming Languages,” in Encyclopedia of Computer Science and Technology, ed. Jack Belzer, Albert G. Holzman, and Allen Kent, vol. 7 (Marcel Dekker Inc., 1975), 425.

Zuse, “Some Remarks on the History of Computing in Germany,” 611–12.

Knuth and Pardo, “Early Development of Programming Languages,” 424.

H. R. Schwarz, “The Early Years of Computing in Switzerland,” Annals of the History of Computing 3, no. 2 (1981): 121.

Ibid., 123–25.

Ibid., 125.

H. Rutishauser, “Automatische Rechenplanfertigung bei programmgesteuerten Rechenmaschinen,” Z. Angew. Math. Mech. 32, no. 3 (1952): 312.

Bauer, “Between Zuse and Rutishauser,” 516.

Knuth and Pardo, “Early Development of Programming Languages,” 438–39.

Rutishauser, “Automatische Rechenplanfertigung bei programmgesteuerten Rechenmaschinen,” 313.

Knuth and Pardo, “Early Development of Programming Languages,” 440.

Ibid., 443.

K. Samelson and F. Bauer, “The ALCOR Project,” in Symbolic Languages in Data Processing: Proc. of the Symp. Organized and Edited by the Int. Computation Center, Rome, 26-31 March 1962 (New York: Gordon and Breach, 1962), 207.

Friedrich L. Bauer, “From the Stack Principle to ALGOL,” in Software Pioneers: Contributions to Software Engineering, ed. Manfred Broy and Ernst Denert (Berlin: Springer, 2002), 30–33.

Ibid., 34.

Kenneth Flamm, Creating the Computer (The Brookings Institution, 1988), 102.

Sammet, Programming Languages, 134.

Knuth and Pardo, “Early Development of Programming Languages,” 474–75.

Ibid., 475.

Rosen, “Programming Systems and Languages. A Historical Survey,” 7.

Sammet, Programming Languages, 139–43.

Ibid., 139.

Ibid.

Rosen, “Programming Systems and Languages. A Historical Survey,” 7.

Sammet, Programming Languages, 141.

Knuth and Pardo, “Early Development of Programming Languages,” 479.

Ibid., 452–55.

Ibid., 479.

Sammet, Programming Languages, 136.

Ibid.

Ibid., 137.

Backus, “The History of FORTRAN I, II, and III,” 166.

Ibid., 167.

Sammet, Programming Languages, 143–72.

Knuth and Pardo, “Early Development of Programming Languages,” 478.

Perlis and Samelson, “Preliminary Report: IAL,” 22.

Sammet, Programming Languages, 175.

Rosen, “Programming Systems and Languages. A Historical Survey,” 10.